The robots.txt file is a key tool for webmasters to control search engine crawling, and it complements a Core Web Vitals (CWV) strategy. By configuring the file, owners can direct bots toward essential content and away from low-value pages, so that crawl activity concentrates on the pages where CWV metrics like LCP, FID, and CLS matter most. Combined with real work on load times, interactivity, and visual stability, this makes crawling more efficient and supports better search rankings, lower bounce rates, and higher user satisfaction. Regular audits of the robots.txt file keep it in step with site changes and current SEO best practices.

Robots.txt Configuration: Unlocking SEO Potential through Smart Crawl Management. Discover how this simple yet powerful file affects search engine visibility and indexing. We'll guide you through the process, from the basics of robots.txt to crafting tailored rules for your site. Learn what Core Web Vitals are, why they matter for SEO, and how robots.txt configuration fits alongside them. Avoid common pitfalls and explore advanced techniques for refining your website's online presence.

Understanding Robots.txt: A Gateway to Crawling and Indexing

Robots.txt is a fundamental part of crawler access control and search engine optimization (SEO). This plain text file, served from the root of a site, acts as a gateway that tells search engine crawlers which paths they may request. Note that it governs crawling rather than indexing: a disallowed URL can still appear in search results if other pages link to it, so pages that must stay out of the index need a `noindex` directive or authentication instead. Getting this balance right keeps crawlers focused on the content that matters and away from the rest.
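To make the gateway idea concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The rules, the `ExampleBot` user agent, and the `example.com` URLs are all made up for illustration; a real site would have its own paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: every crawler may fetch anything except /admin/ and /cart/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A path with no matching rule is allowed by default.
blog_allowed = parser.can_fetch("ExampleBot", "https://example.com/blog/post")
# A path under a Disallow prefix is refused.
admin_allowed = parser.can_fetch("ExampleBot", "https://example.com/admin/users")

print(blog_allowed, admin_allowed)
```

Well-behaved crawlers perform a check like this before requesting a URL. The file is advisory, so it only restrains bots that choose to honor it.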

Crawling and indexing are critical aspects of modern SEO strategies, especially when aiming for Core Web Vitals Optimization. Robots.txt allows webmasters to grant or restrict crawler access to specific sections of a site, such as dynamically generated pages that add little search value. Proper configuration helps important pages get discovered and crawled promptly, which supports better search engine rankings. It's a powerful tool in the relationship between website owners, search engines, and users.

Core Web Vitals and Their Impact on SEO: The Basics

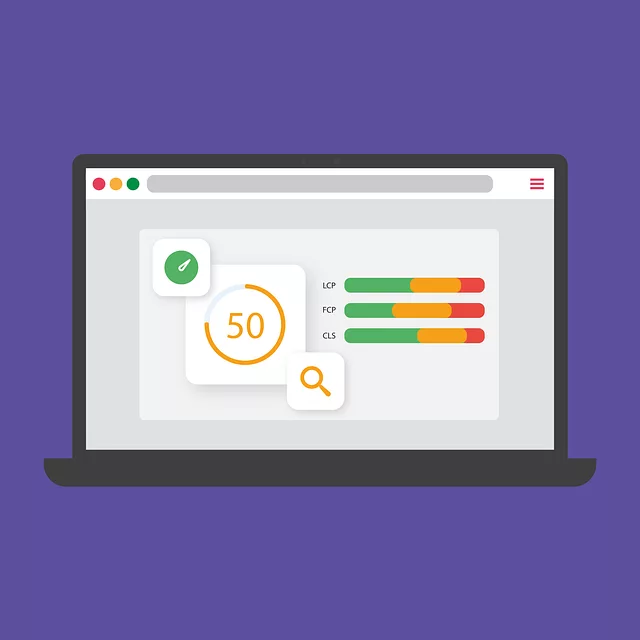

Core Web Vitals are a set of metrics that measure user experience on a website, focusing on loading performance, interactivity, and visual stability. They are Largest Contentful Paint (LCP) for loading, First Input Delay (FID) for interactivity, and Cumulative Layout Shift (CLS) for visual stability. Google replaced FID with Interaction to Next Paint (INP) as the interactivity metric in March 2024. Each plays a role in how search engines like Google assess the quality of a web page.
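As a rough illustration of how these metrics are judged, the sketch below rates a measurement against the "good" and "poor" boundaries Google has published for each metric. The function name `rate_metric` and the table layout are illustrative choices, and the thresholds should be rechecked against current Google documentation before being relied on.

```python
# Published boundaries as (upper bound of "good", upper bound of "needs improvement").
# LCP is in seconds, FID and INP are in milliseconds, CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate_metric(name: str, value: float) -> str:
    """Classify one measurement as 'good', 'needs improvement', or 'poor'."""
    good_limit, poor_limit = THRESHOLDS[name]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for a single page.
page = {"LCP": 2.1, "FID": 150, "CLS": 0.3}
for metric, value in page.items():
    print(metric, value, rate_metric(metric, value))
```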

Optimizing for Core Web Vitals is essential for SEO as it directly impacts site performance and user satisfaction. Faster loading times, seamless interactions, and minimal visual shifts contribute to lower bounce rates and higher engagement, all of which are favored by search algorithms. By implementing Core Web Vitals Optimization, websites can enhance their visibility in search results, attract more organic traffic, and provide a better browsing experience for users.

Optimizing for Core Web Vitals with Robots.txt Configuration

Robots.txt is a powerful tool for website owners to control how search engines crawl and index their content. When it comes to optimizing for Core Web Vitals (CWV), a crucial aspect of modern search engine ranking, this file plays a significant role. By configuring Robots.txt effectively, you can ensure that your site’s most important elements are accessible to search bots while blocking potentially detrimental or low-quality pages.

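For CWV optimization, the focus should be on user experience metrics such as Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS). Robots.txt can keep crawlers away from sections that perform poorly in these areas or add little value, so that crawl activity concentrates on your strongest pages. Keep in mind that a `Disallow` rule stops crawling but does not guarantee removal from search results, and it does nothing to speed up a page for visitors; the slow pages themselves still need fixing. Used this way, robots.txt helps ensure that your website's most visible and valuable content is what search engines see first, contributing to better rankings and enhanced user satisfaction.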

Crafting Effective Robots.txt Rules for Better Search Engine Visibility

Common Mistakes to Avoid When Setting Up Robots.txt

When setting up your robots.txt file, it’s crucial to steer clear of some common pitfalls that can hinder your website’s performance and search engine optimization (SEO). One frequently overlooked aspect is the inclusion of too many disallowed pages. While restricting access for crawlers is essential for certain sensitive content, an overly restrictive robots.txt may prevent valuable pages from being indexed, impacting your site’s visibility. Ensure every rule is tailored to specific needs, allowing relevant bots access while blocking others.

Another mistake to avoid is leaving an outdated file in place or failing to test it. Regularly check and update your robots.txt so it matches your website's current structure and SEO strategy. Core Web Vitals Optimization requires a balanced approach in which you allow the crawls you need while keeping bots out of areas that waste crawl budget. Remember that robots.txt is publicly readable and purely advisory, so it should not be treated as a privacy or security control. Testing will surface problems early, ensuring the file directs search engines efficiently without harming your site's performance or ranking potential.
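One way to catch an overly restrictive file during testing is to run a list of pages you want crawled against the rules and flag any that are blocked. The sketch below does this with Python's standard `urllib.robotparser`. The helper `blocked_urls`, the sample rules, and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return the URLs that the given rules would stop user_agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules with a mistake: "/products" is a prefix match, so it blocks
# every product page, which is probably not what the site owner intended.
ROBOTS_TXT = """\
User-agent: *
Disallow: /products
Disallow: /tmp/
"""

# Pages the site owner wants search engines to crawl.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/blog/launch",
]

problems = blocked_urls(ROBOTS_TXT, "Googlebot", IMPORTANT_URLS)
print(problems)
```

Running a check like this whenever the file or the site structure changes turns the audit into a repeatable step. Python's parser implements the basic protocol only and does not understand wildcard patterns, so results for rules that use `*` or `$` inside paths may differ from what a given search engine does.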

Advanced Techniques for Refining Your Website's Robots.txt File

In the pursuit of optimal website performance and user experience, especially with the focus on Core Web Vitals, refining your Robots.txt file becomes an often-overlooked yet powerful strategy. This text file acts as a roadmap for search engine bots, dictating which pages they can and cannot access. Advanced techniques involve understanding and leveraging specific directives like `Allow` and `Disallow`. By carefully selecting which paths to grant or restrict access to, webmasters can significantly impact site crawling efficiency.
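A common use of `Allow` together with `Disallow` is to open a single file inside an otherwise blocked directory. The sketch below checks such a rule set with Python's standard `urllib.robotparser`. The paths and the `ExampleBot` name are invented for illustration. The `Allow` line is placed first on purpose: Python's parser applies the first matching rule, whereas Google documents a most-specific-match rule, and this ordering gives the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block the downloads directory but keep one file crawlable.
ROBOTS_TXT = """\
User-agent: *
Allow: /downloads/catalog.pdf
Disallow: /downloads/
"""

parser = RobotFileParser()
parser.parse(robots_txt_lines := ROBOTS_TXT.splitlines())

results = {
    path: parser.can_fetch("ExampleBot", "https://example.com" + path)
    for path in ("/downloads/catalog.pdf", "/downloads/internal.zip", "/about")
}

for path, allowed in results.items():
    print(path, "allowed" if allowed else "blocked")
```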

One such technique is writing separate `User-agent` groups so that different bots receive different rules. This allows fine-grained control, keeping critical pages accessible to the crawlers that matter while shielding the server from bots that add load without adding value. Additionally, regular audits of the robots.txt file are essential to keep up with changing website structures and SEO best practices, which in turn supports overall Core Web Vitals optimization efforts.
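Per-bot groups can be sketched as follows, again checked with Python's standard `urllib.robotparser`. `ExampleImageBot` and `OtherBot` are made-up crawler names and the paths are placeholders. A crawler uses the group that names it and falls back to the `*` group only when no named group matches.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules with one group per crawler and a catch-all group at the end.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /staging/

User-agent: ExampleImageBot
Disallow: /

User-agent: *
Disallow: /staging/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Check one path on the placeholder host for the given crawler name."""
    return parser.can_fetch(agent, "https://example.com" + path)


# Googlebot has its own group, so the /search/ rule in the * group does not apply.
print(allowed("Googlebot", "/search/results"))
# ExampleImageBot is shut out of the whole site.
print(allowed("ExampleImageBot", "/blog/"))
# Any crawler without a named group falls back to the * rules.
print(allowed("OtherBot", "/search/results"))
```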