The `robots.txt` file is a useful tool for website optimization, and it is often discussed alongside Core Web Vitals Optimization. It lets site owners control which URLs crawlers may request, balancing discoverability against the load crawlers place on the server. Note that it governs crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it. Core Web Vitals measure loading speed, responsiveness, and visual stability. They currently consist of Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay (FID) in March 2024), and Cumulative Layout Shift (CLS). Optimizing these metrics improves user satisfaction and can support SEO rankings, because Google uses them in its ranking systems. A well-structured `robots.txt` file that avoids common mistakes improves crawl efficiency and keeps crawlers from wasting requests on duplicate URLs. It also reduces unnecessary server load. Its effect on Core Web Vitals is indirect, because those metrics are measured from real user visits. Regular monitoring and updates keep the file aligned with your site as it changes.

Robots.txt Configuration: A Vital SEO Strategy

In today’s digital landscape, search engine optimization (SEO) is paramount for online visibility. One often-overlooked tool in an SEO strategist’s toolkit is the Robots.txt file. This article examines Robots.txt configuration, a simple but effective way to guide search engine crawlers and support website performance. We’ll explore key concepts like Core Web Vitals Optimization and explain how Robots.txt affects crawling and indexing. We’ll also provide a step-by-step guide to crafting an effective strategy while avoiding common pitfalls.

Understanding Robots.txt and Its Role in SEO

Robots.txt is a fundamental tool in website management that plays an important role in search engine optimization (SEO). It acts as a communication channel between site owners and search engine crawlers, specifying which pages or sections of a site crawlers may request. Well-behaved crawlers follow these rules voluntarily. Blocking a URL does not guarantee that it stays out of the index. To keep a page out of search results, leave it crawlable and add a `noindex` meta tag or `X-Robots-Tag` header instead. By configuring this file, website owners can control crawler access and keep bots focused on the content that matters.
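To make the crawl-permission model concrete, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. It parses a robots.txt from a string and asks whether a crawler may fetch each URL. The file contents, the `example.com` URLs, and the helper name `build_parser` are hypothetical. Python’s parser only approximates how Google reads the file (for example, it ignores `*` and `$` wildcards inside paths), so treat it as an illustration rather than a reproduction of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)

for url in (
    "https://www.example.com/",
    "https://www.example.com/products/shoes",
    "https://www.example.com/admin/login",
    "https://www.example.com/search?q=shoes",
):
    # can_fetch() answers "may this user agent crawl this URL?"; it says nothing about indexing.
    verdict = "crawl allowed" if parser.can_fetch("Googlebot", url) else "crawl blocked"
    print(url, "->", verdict)
```

The answer is only about crawling. A blocked URL such as `/admin/login` could still be indexed from external links, which is why the `noindex` route is the right tool for keeping pages out of results.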

In the context of Core Web Vitals Optimization, Robots.txt plays a supporting role in balancing discoverability and performance. It lets webmasters keep crawlers away from low-value or dynamically generated URLs, such as internal search results or endless filter combinations, which reduces unnecessary load on the server. It is not a security measure, however. The file is publicly readable, so sensitive content needs authentication rather than a `Disallow` rule. Nor does it affect how Core Web Vitals are measured: Google assesses loading, responsiveness, and visual stability from real Chrome users’ visits, not from crawler requests.

Core Web Vitals: A Brief Overview

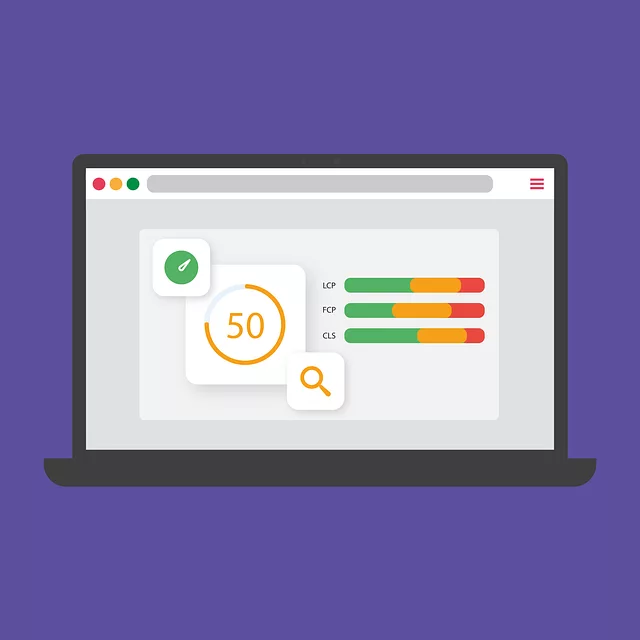

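The Core Web Vitals are a set of metrics that measure real-world user experience on a webpage, covering loading performance, responsiveness, and visual stability. They currently comprise Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). INP replaced First Input Delay (FID) as a Core Web Vital in March 2024.

- LCP measures how long the largest visible content element, such as a hero image or headline block, takes to render.
- INP assesses how quickly a page visually responds to clicks, taps, and key presses throughout a visit. FID, by contrast, only measured the input delay of the first interaction.
- CLS scores unexpected layout shifts that occur while users view and interact with a page.

Google considers a page to deliver a good experience when, at the 75th percentile of visits, LCP is at most 2.5 seconds, INP is at most 200 milliseconds, and CLS is at most 0.1.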
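The sketch below shows how the 75th-percentile assessment works. It takes a hypothetical set of per-visit measurements, computes the 75th percentile of each metric with a simple nearest-rank method, and rates the result against Google’s published boundaries. LCP and INP are rated good at up to 2.5 s and 200 ms, and poor above 4 s and 500 ms. CLS is rated good at up to 0.1 and poor above 0.25. The sample data and function names are assumptions for illustration. Real field tools such as the Chrome UX Report aggregate far more visits and may compute percentiles differently.

```python
import math

# "Good" upper bound and "poor" lower bound (exclusive) per Core Web Vital.
# LCP and INP are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}

def p75(samples: list[float]) -> float:
    """75th percentile via the nearest-rank method (a simplification of field tooling)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank into the sorted list
    return ordered[rank - 1]

def rate(metric: str, value: float) -> str:
    """Classify a value as good, needs improvement, or poor."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"

# Hypothetical per-visit measurements for one page.
FIELD_DATA = {
    "LCP": [1800, 2100, 2300, 2600, 3900, 2200, 2000, 2400],
    "INP": [80, 120, 150, 240, 90, 310, 260, 130],
    "CLS": [0.02, 0.05, 0.3, 0.01, 0.08, 0.04, 0.12, 0.03],
}

for metric, samples in FIELD_DATA.items():
    value = p75(samples)
    print(f"{metric}: p75 = {value} -> {rate(metric, value)}")
```

With these made-up samples, INP lands in "needs improvement" (p75 = 240 ms), even though most individual visits were fast. The assessment is driven by the slower quarter of visits, not the average.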

Optimizing these Core Web Vitals benefits both user experience and search visibility, because Google uses them in its ranking systems as indicators of page experience. Developers can lower LCP and INP and keep CLS low. This makes pages load faster, respond to user actions more promptly, and hold a steadier visual layout, which improves user satisfaction and supports better search engine optimization (SEO) results.

Why Optimize for Core Web Vitals?

In today’s digital landscape, user experience increasingly influences search visibility, which is why Core Web Vitals Optimization deserves attention. Core Web Vitals measure key aspects of a webpage’s performance: loading speed, responsiveness to input, and visual stability. Google uses these metrics in its ranking systems, so improving them can affect how your site ranks and, in turn, its visibility and traffic. The relevance and quality of your content still matter more. By focusing on Core Web Vitals, you give visitors a smoother experience, which tends to encourage longer visits, lower bounce rates, and stronger engagement.

This optimization is not just about aesthetics; it’s a strategic move to improve your website’s health and performance. Google has made it clear that user satisfaction matters to its ranking systems. By prioritizing Core Web Vitals, you address direct indicators of user experience, which can lead to better search rankings, more organic traffic, and ultimately, greater online success.

The Impact of Robots.txt on Website Performance

The `robots.txt` file plays a supporting role in website performance by guiding how web crawlers use your server. By specifying which pages or sections crawlers may request, you can cut unnecessary bot traffic, which matters most for large sites and for servers with limited capacity. This is particularly useful for dynamically generated URLs that add little SEO value but can consume a lot of crawl activity, such as faceted navigation, internal search results, or session-parameter variants. Robots.txt does not change how quickly a page loads in a visitor’s browser, so its contribution to Core Web Vitals Optimization is indirect.

A well-configured `robots.txt` file keeps search engine bots focused on your most valuable and relevant pages, so new and updated content tends to be discovered and recrawled sooner. It also avoids unnecessary data transfer and server load. During heavy crawling, this can help keep response times stable for real visitors, and server response time in turn feeds into LCP. Together, these effects support visitor engagement and the metrics that contribute to overall website success.
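One way to gauge the crawl-efficiency benefit before deploying a rule change is to replay a sample of crawler requests against the proposed rules and count how many would be avoided. The sketch below does this with `urllib.robotparser`. The rules, the sampled paths (imagined as extracted from server access logs), and the helper `blocked_share` are all hypothetical. Python’s parser matches plain path prefixes only, so the rules avoid wildcards.

```python
from urllib.robotparser import RobotFileParser

# Proposed rules: keep crawlers out of faceted-navigation and cart URLs.
# Python's parser matches plain path prefixes only (no * or $ wildcards).
PROPOSED_RULES = """\
User-agent: *
Disallow: /products/filter/
Disallow: /cart
"""

# Hypothetical sample of paths Googlebot requested, e.g. taken from access logs.
CRAWLED_PATHS = [
    "/products/shoes",
    "/products/filter/color-red/size-9",
    "/products/filter/color-blue",
    "/cart",
    "/blog/core-web-vitals",
    "/products/filter/size-10",
]

def blocked_share(rules: str, paths: list[str], agent: str = "Googlebot") -> float:
    """Return the fraction of sampled crawler requests the rules would block."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    blocked = [p for p in paths if not parser.can_fetch(agent, p)]
    return len(blocked) / len(paths)

share = blocked_share(PROPOSED_RULES, CRAWLED_PATHS)
print(f"{share:.0%} of sampled crawl requests would be avoided")
```

A high share suggests crawlers were spending much of their activity on low-value URLs. Check the list of blocked paths by hand before shipping, though: a share that looks too good often means a rule is broader than intended.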

Crafting an Effective Robots.txt Configuration

Crafting an effective robots.txt configuration is a strategic process that should align with your site’s goals and search engine optimization (SEO) strategy, including Core Web Vitals Optimization. This plain-text file is served from the root of your domain (for example, `https://www.example.com/robots.txt`). It acts as a map for web crawlers, telling them which URLs they may request. It’s not just about blocking access. The goal is to keep important content accessible to search engines while steering them away from duplicate or low-value URLs.

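For optimal results, focus on clarity and specificity in your robots.txt rules. Decide which sections of your website you want crawled and which should be excluded, then express that with the standard directives:

- a `User-agent` line naming the crawler a group applies to;
- `Disallow` and `Allow` lines containing URL path prefixes;
- an optional `Sitemap` line with the full URL of your XML sitemap.

Google resolves conflicts by applying the most specific (longest) matching path, and it supports the `*` and `$` wildcards. A well-structured robots.txt improves crawl efficiency, keeps crawlers from spending requests on duplicate URLs, and reduces server load. All of these support your Core Web Vitals work and overall SEO performance.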
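Generating the file from a structured definition, then reading it back with a parser, is one way to keep rules clear and catch mistakes early. The sketch below is a minimal version of that idea. The function `render_robots`, the rule set, and the sitemap URL are hypothetical. One design choice matters here. Google picks the longest matching rule, whereas Python’s `urllib.robotparser` uses the first rule that matches, so the narrower `Allow` line is listed before the broader `Disallow` to make both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

def render_robots(groups: dict[str, list[tuple[str, str]]], sitemap: str = "") -> str:
    """Render robots.txt text from {user_agent: [(directive, path), ...]}."""
    lines = []
    for agent, rules in groups.items():
        lines.append(f"User-agent: {agent}")
        for directive, path in rules:
            lines.append(f"{directive}: {path}")
        lines.append("")  # a blank line ends each group
    if sitemap:
        # Sitemap takes an absolute URL and applies independently of any group.
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"

# Hypothetical rules for an example site.
SITE_RULES = {
    "*": [
        ("Allow", "/search/help"),  # listed first because Python's parser uses the first match
        ("Disallow", "/search"),
        ("Disallow", "/admin/"),
    ],
}

robots_txt = render_robots(SITE_RULES, sitemap="https://www.example.com/sitemap.xml")
print(robots_txt)

# Round-trip through the standard-library parser as a sanity check.
checker = RobotFileParser()
checker.parse(robots_txt.splitlines())
for path in ("/search/help", "/search?q=shoes", "/admin/users", "/products/"):
    print(path, "allowed" if checker.can_fetch("Googlebot", path) else "blocked")
print("Sitemaps:", checker.site_maps())
```

`site_maps()` requires Python 3.8 or later. For anything involving wildcards, validate against Google’s own tooling as well, since this parser will not interpret them.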

Common Mistakes to Avoid During Setup

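When configuring a robots.txt file, it’s essential to steer clear of some common pitfalls to protect website performance and search engine visibility. One frequent mistake is over-blocking, where site owners inadvertently restrict access to crucial pages or resources by setting overly broad rules. A typical case is disallowing a directory that holds CSS, JavaScript, or image files. Googlebot then cannot render pages as visitors see them, which can hurt how those pages are understood and ranked and undercuts the SEO value of your Core Web Vitals work. Short prefixes cause similar trouble: `Disallow: /blog` blocks not only `/blog/` and everything under it but also paths such as `/blog-archive/`.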
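A quick guard against over-blocking is to list the resources your pages need in order to render and confirm that crawlers may fetch them. The sketch below uses `urllib.robotparser` for this. The robots.txt contents, the resource paths, and the helper `blocked_resources` are hypothetical. In practice you might collect the resource list from a page’s HTML or from a browser’s network panel.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with an over-broad rule: /assets/ holds the site's CSS and JS.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
Disallow: /admin/
"""

# Hypothetical list of resources a typical page needs in order to render.
CRITICAL_RESOURCES = [
    "/assets/css/main.css",
    "/assets/js/app.js",
    "/images/hero.webp",
]

def blocked_resources(robots_txt: str, resources: list[str], agent: str = "Googlebot") -> list[str]:
    """List the render-critical resources that the given agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [r for r in resources if not parser.can_fetch(agent, r)]

problems = blocked_resources(ROBOTS_TXT, CRITICAL_RESOURCES)
for path in problems:
    print("Over-blocked:", path)
```

Here the stylesheet and script are flagged, while the hero image is fine. Narrowing the rule, for example to a specific non-public subdirectory, would resolve the problem.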

Another blunder to avoid is neglecting how rules apply to different user agents. A crawler follows only the most specific `User-agent` group that matches it. Once you add a group for `Googlebot`, Googlebot ignores everything in the `User-agent: *` group, so any general restrictions must be repeated in the new group. Misconfiguration here can let crawlers into areas you meant to block, or block pages you meant to expose. Either way, it works against the goal of maintaining a well-structured and accessible website.
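The group-selection pitfall is easy to reproduce. In the hypothetical file below, the `User-agent: *` group blocks `/admin/`, but a dedicated `Googlebot` group only repeats the `/staging/` rule. Because Googlebot follows only its own group, `/admin/` becomes crawlable for Googlebot while other crawlers (here `Bingbot`) remain blocked. The sketch uses Python’s `urllib.robotparser`. Its user-agent matching (a case-insensitive substring check) is a simplification of Google’s, although both give the same result for this file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: a general group plus a dedicated Googlebot group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/

User-agent: Googlebot
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "Bingbot"):
    # Each crawler uses only the group that matches it; Googlebot never reads the * group here.
    allowed = parser.can_fetch(agent, "/admin/settings")
    print(f"{agent}: /admin/settings ->", "allowed" if allowed else "blocked")
```

The fix is to add `Disallow: /admin/` to the `Googlebot` group as well, so every group carries the full set of rules intended for that crawler.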

Monitoring and Updating Your Robots.txt File

Regular monitoring and updates are essential for your Robots.txt file, especially in today’s dynamic digital landscape. The file guides search engine crawlers by specifying which pages on your website they may crawl. Keeping it current supports your Core Web Vitals Optimization efforts and helps your site remain efficient and user-friendly.

Review your Robots.txt configuration periodically, and whenever your website’s structure or content changes. As your site evolves, new pages may need to be crawlable while other sections may no longer be relevant. Promptly updating this file prevents problems such as important pages being blocked from crawling, which can hurt your website’s visibility. Keep in mind that Google generally caches robots.txt for up to 24 hours, so changes may not take effect immediately.
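A lightweight way to monitor changes is to compare the old and new versions of the file against a list of URLs that must stay crawlable, and to flag any that the new rules would block. The sketch below does this with `urllib.robotparser`. The two file versions, the `MUST_CRAWL` list, and the helper names are hypothetical. The edit simulates someone who meant to block a drafts folder but wrote the broader prefix `/blog`.

```python
from urllib.robotparser import RobotFileParser

def parser_for(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def newly_blocked(old_txt: str, new_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return URLs that the old rules allowed but the new rules block for `agent`."""
    old, new = parser_for(old_txt), parser_for(new_txt)
    return [u for u in urls if old.can_fetch(agent, u) and not new.can_fetch(agent, u)]

OLD = """\
User-agent: *
Disallow: /admin/
"""

# Hypothetical edit: intended to block a drafts folder, but the prefix /blog is too broad.
NEW = """\
User-agent: *
Disallow: /admin/
Disallow: /blog
"""

# Hypothetical URLs that must stay crawlable (for example, taken from your XML sitemap).
MUST_CRAWL = ["/", "/blog/core-web-vitals", "/products/shoes"]

regressions = newly_blocked(OLD, NEW, MUST_CRAWL)
if regressions:
    print("Newly blocked important URLs:", regressions)
```

Run as a pre-deploy check, this catches the regression before crawlers ever see it. To monitor the live file instead, `RobotFileParser` can fetch it directly via `set_url()` and `read()`.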